
Ubuntu 22.04 + 8×A800: Running deepseek-r1 with Ollama

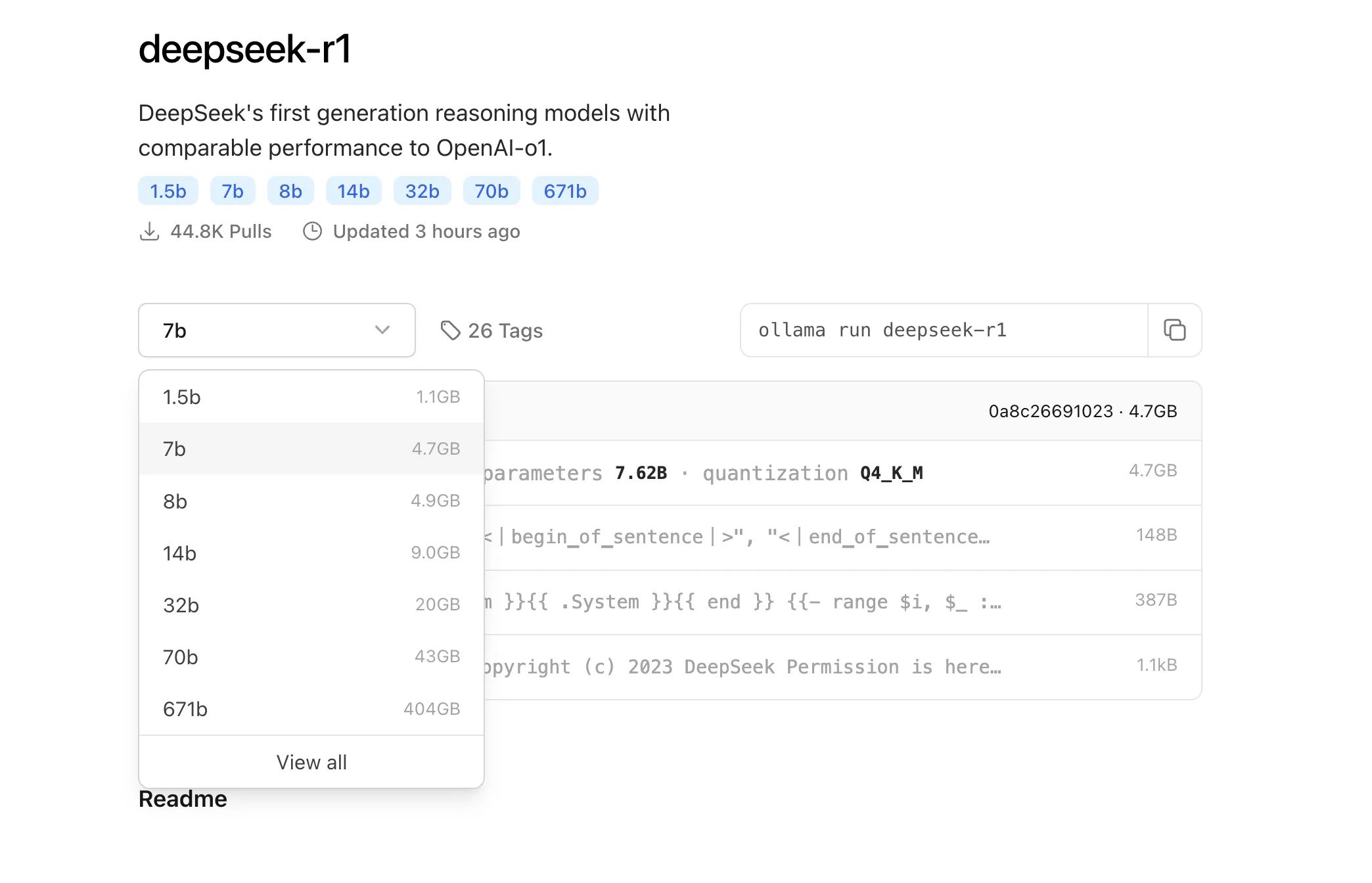

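I noticed that my machine just meets the requirements for the 671B version of deepseek-r1, and since the hardware was sitting idle anyway, I decided to test it.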

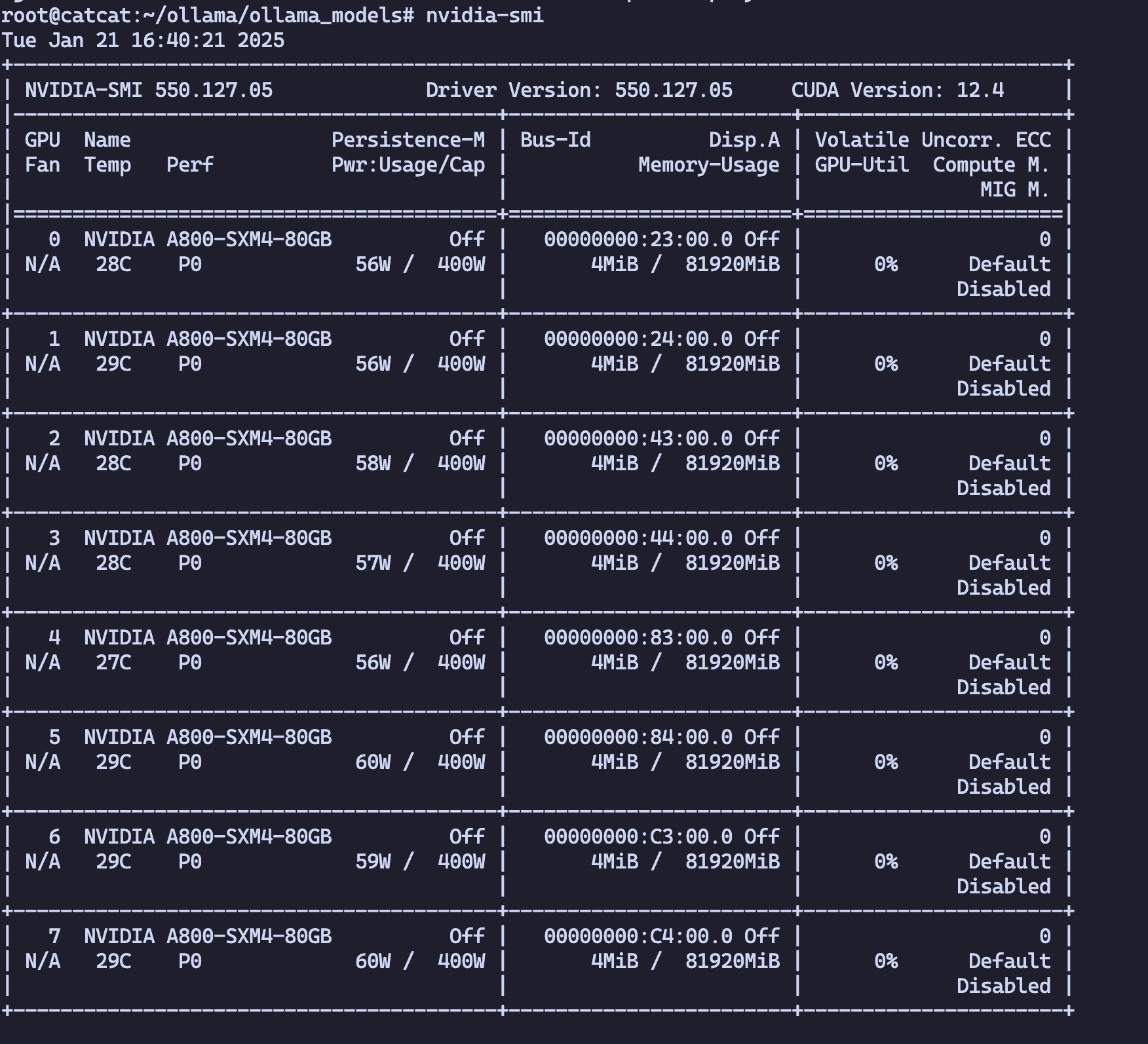

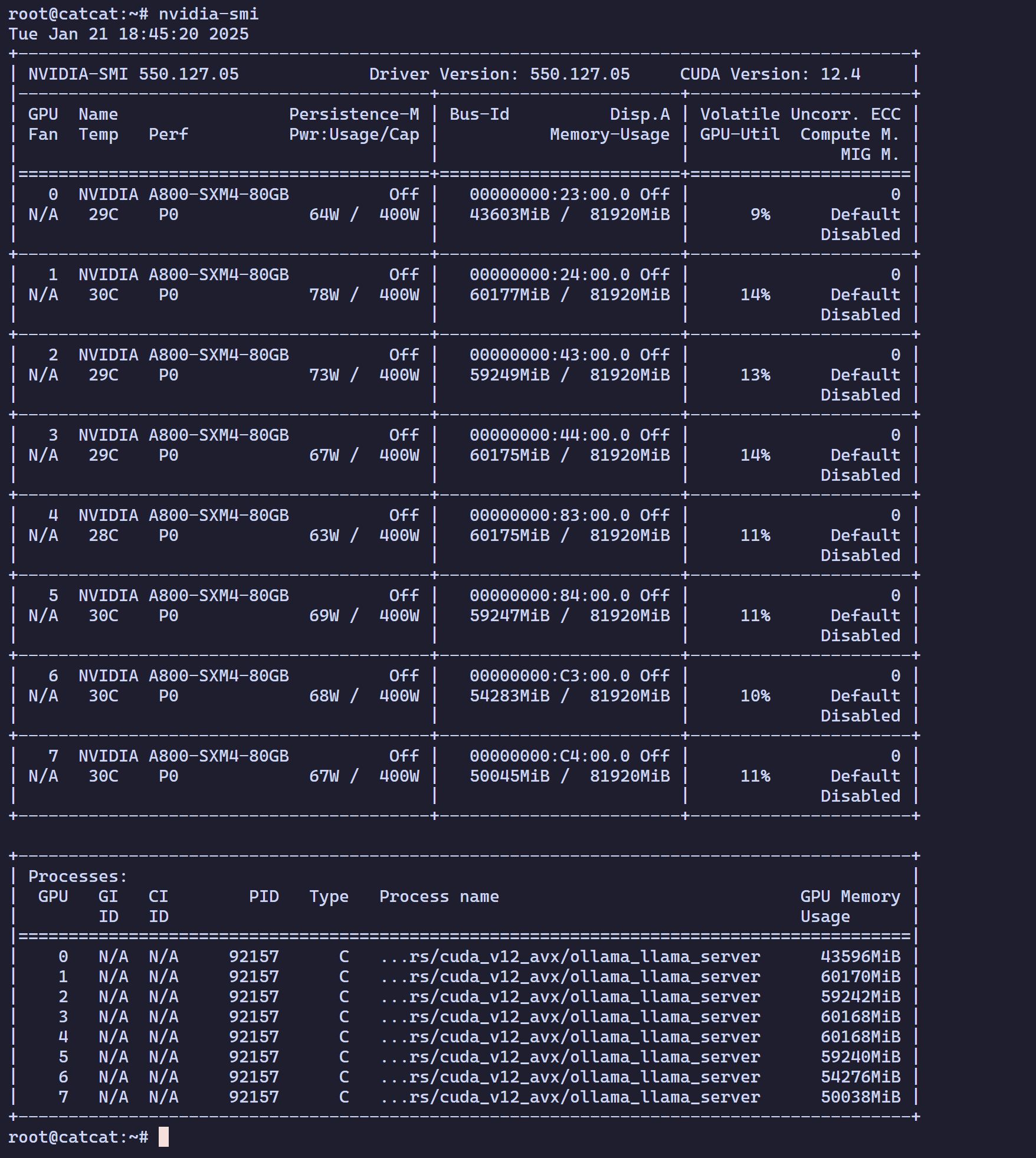

System hardware

- Processor: 2 × Intel(R) Xeon(R) Platinum 8362 CPU @ 2.80GHz
- Logical cores: 128
- Memory: 1024 GB
- Storage: 1.5 TB NVMe
- GPU: 8 × NVIDIA A800 (SXM4, 80 GB each)
- NVIDIA-SMI: 550.127.05
- Driver version: 550.127.05
- CUDA version: 12.4
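Whether the 671B model fits comes down mostly to total GPU memory. A minimal sketch, assuming `nvidia-smi` is on the PATH: the hypothetical helper `total_vram_mib` sums the per-GPU totals (in MiB) that `nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits` prints, one value per line. The result can then be compared with the model size listed on the Ollama model page.

```shell
#!/usr/bin/env bash
# Sum per-GPU total memory in MiB, read from stdin (one integer per line), e.g.:
#   nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | total_vram_mib
# total_vram_mib is a hypothetical helper, not part of any NVIDIA tool.
total_vram_mib() {
  # "+ 0" makes empty input print 0 instead of an empty string.
  awk '{ sum += $1 } END { print sum + 0 }'
}
```

With eight 81920 MiB cards, as on this machine, the sum is 655360 MiB (640 GiB).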

Download Ollama

Download page: https://ollama.com/

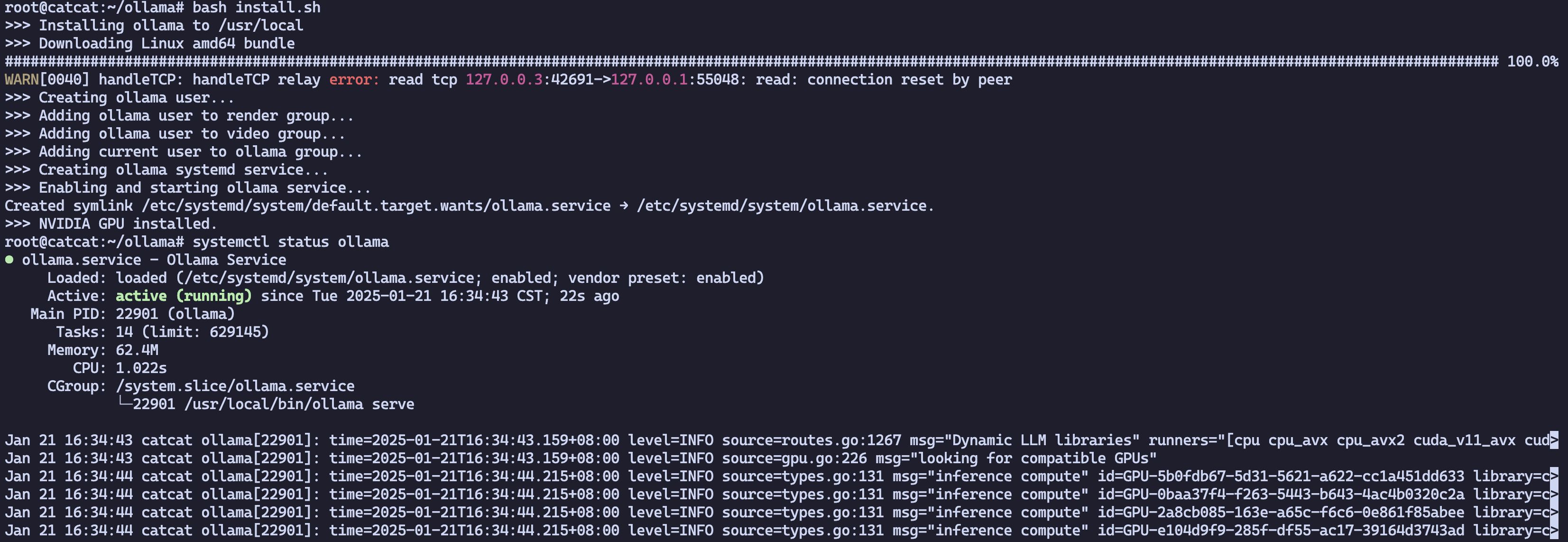

Install Ollama

Use the official install script directly:

curl -fsSL https://ollama.com/install.sh | sh
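After the script finishes, it is worth confirming that the `ollama` binary is on the PATH before going further. A small sketch; `require_cmd` is a hypothetical helper built only on the POSIX `command -v` builtin.

```shell
#!/usr/bin/env bash
# Print where a command lives, or fail with a message if it is not on PATH.
# require_cmd is a hypothetical helper for this walkthrough.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1 -> $(command -v "$1")"
  else
    echo "missing: $1" >&2
    return 1
  fi
}
```

For example, `require_cmd ollama && ollama --version`, followed by `systemctl status ollama` to look at the service the install script registered.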

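Configure the model download path

Create a directory for the models, then point Ollama at it:

    mkdir -p /root/ollama/ollama_models

If OLLAMA_MODELS is not set, the systemd service stores models in the default path /usr/share/ollama/.ollama/models.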
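The ~/.bashrc edit in the next step can also be scripted so that re-running it does not append duplicate lines. A minimal sketch; `set_ollama_models` is a hypothetical helper whose defaults mirror the paths used here. Note that a variable in root's `.bashrc` only reaches an `ollama serve` started from root's interactive shell. The systemd service runs as the `ollama` user and takes its environment from the unit file, so for the service the same value would have to go into an `Environment=` line, and the `ollama` user would need read/write access to the directory.

```shell
#!/usr/bin/env bash
# Create the model directory and add `export OLLAMA_MODELS=...` to an rc file,
# skipping the append if an OLLAMA_MODELS export is already present.
# set_ollama_models is hypothetical; defaults follow the paths in this article.
set_ollama_models() {
  local dir="${1:-/root/ollama/ollama_models}"
  local rc="${2:-$HOME/.bashrc}"
  mkdir -p "$dir" || return 1
  touch "$rc" || return 1
  if ! grep -q '^export OLLAMA_MODELS=' "$rc"; then
    printf 'export OLLAMA_MODELS=%s\n' "$dir" >> "$rc"
  fi
}
```

Typical use as root: `set_ollama_models && source ~/.bashrc`.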

Add the variable to ~/.bashrc (for example with vim ~/.bashrc):

    export OLLAMA_MODELS=/root/ollama/ollama_models

Start the Ollama service

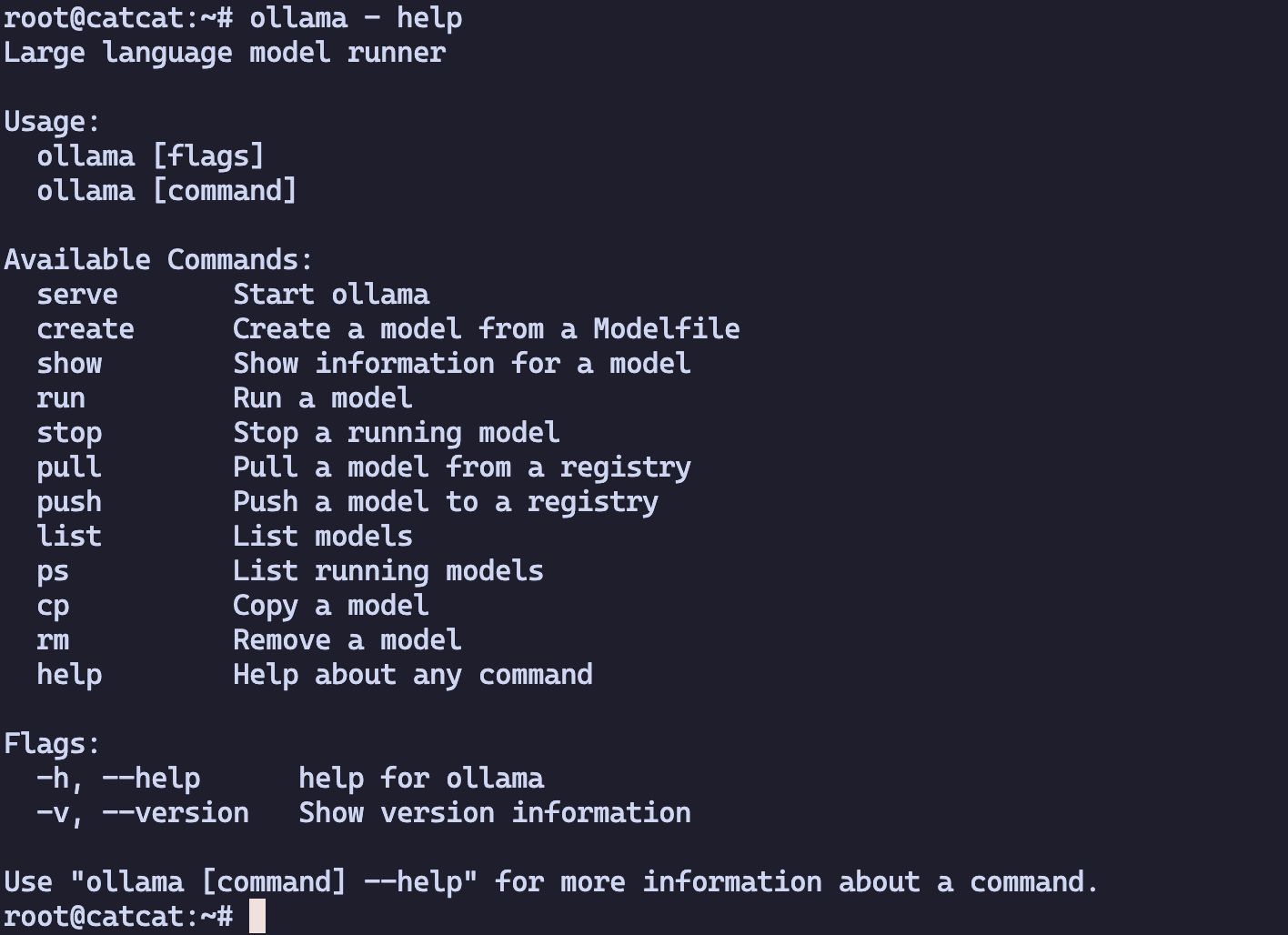

Run Ollama

ollama serve
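Once the server is up, the root endpoint of the HTTP API answers with `Ollama is running`, which makes a simple readiness check. A minimal sketch, assuming `curl` is installed and the default address `127.0.0.1:11434`; `wait_for_ollama` is a hypothetical helper. If the install script already started the systemd service, a second `ollama serve` will most likely fail because the port is already taken, in which case the check can simply be pointed at the running service.

```shell
#!/usr/bin/env bash
# Poll the Ollama API root until it responds or the timeout (in seconds) expires.
# wait_for_ollama is hypothetical; 11434 is Ollama's default port.
wait_for_ollama() {
  local url="${1:-http://127.0.0.1:11434}"
  local timeout="${2:-30}"
  local i=0
  while [ "$i" -lt "$timeout" ]; do
    # GET / returns "Ollama is running" when the server is ready.
    if curl -fsS --max-time 2 "$url/" >/dev/null 2>&1; then
      echo "Ollama is up at $url"
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "Ollama did not respond at $url within ${timeout}s" >&2
  return 1
}
```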

Modify the Ollama configuration

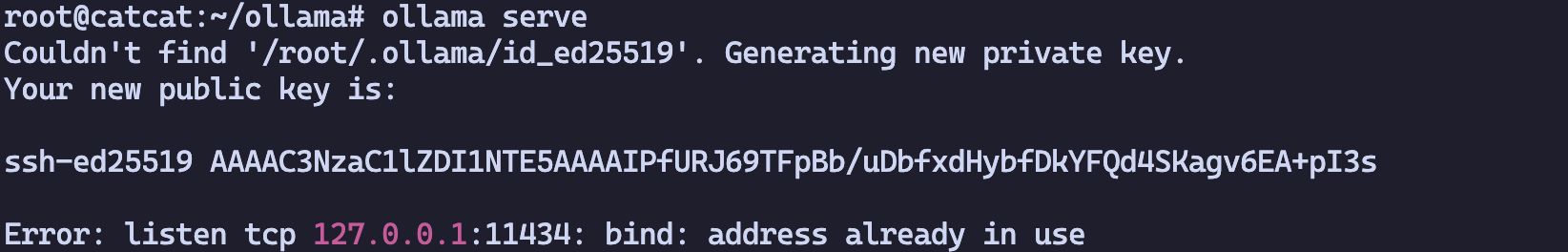

By default, Ollama listens only on localhost port 11434, so it can only be reached from the machine itself.
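Besides editing the unit file directly, as done below, the Ollama FAQ suggests `systemctl edit ollama.service`, which stores overrides in a separate drop-in file; because the drop-in is not part of the main unit file, it is not lost if the install script later rewrites that file. A sketch of writing the drop-in non-interactively; `write_ollama_dropin` is hypothetical, and its optional second argument adds an `OLLAMA_MODELS` path for the service. Binding to 0.0.0.0 exposes the API, which has no authentication of its own, on every network the host is attached to, so a firewall rule limiting port 11434 is worth considering.

```shell
#!/usr/bin/env bash
# Write a systemd drop-in (override.conf) for ollama.service that sets
# OLLAMA_HOST and, optionally, OLLAMA_MODELS. write_ollama_dropin is hypothetical.
write_ollama_dropin() {
  local dir="${1:-/etc/systemd/system/ollama.service.d}"
  local models="${2:-}"
  mkdir -p "$dir" || return 1
  {
    echo '[Service]'
    echo 'Environment="OLLAMA_HOST=0.0.0.0"'
    if [ -n "$models" ]; then
      printf 'Environment="OLLAMA_MODELS=%s"\n' "$models"
    fi
  } > "$dir/override.conf"
}
```

Run as root with no arguments, it writes /etc/systemd/system/ollama.service.d/override.conf; follow it with `systemctl daemon-reload` and `systemctl restart ollama`.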

Edit the unit file and add Environment="OLLAMA_HOST=0.0.0.0" under [Service]:

    vim /etc/systemd/system/ollama.service

The resulting file (cat /etc/systemd/system/ollama.service):

    [Unit]
    Description=Ollama Service
    After=network-online.target

    [Service]
    ExecStart=/usr/local/bin/ollama serve
    User=ollama
    Group=ollama
    Restart=always
    RestartSec=3
    Environment="PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin"
    Environment="OLLAMA_HOST=0.0.0.0"

    [Install]
    WantedBy=default.target

Restart Ollama

    systemctl daemon-reload
    systemctl restart ollama

Stop the service:

    systemctl stop ollama

Start the service:

    systemctl start ollama

Run the model

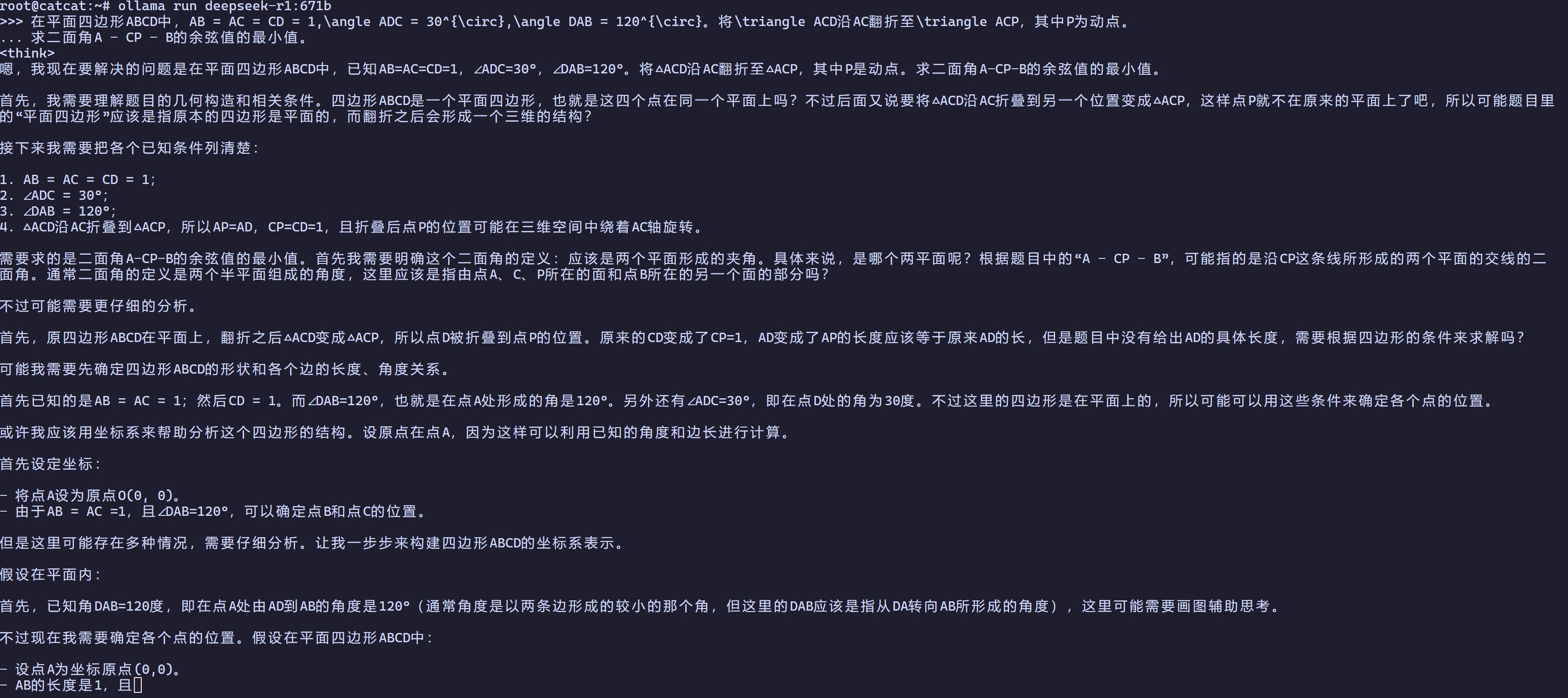

ollama run deepseek-r1:671b
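Besides the interactive `ollama run`, the model can be queried over the HTTP API, which is also what Open WebUI talks to later on. A minimal sketch of a non-streaming call to `POST /api/generate`; `json_escape`, `build_generate_payload`, `ollama_generate` and the `OLLAMA_URL` variable are hypothetical names, and the escaping only handles backslashes and double quotes, so it assumes a single-line prompt.

```shell
#!/usr/bin/env bash
# Escape backslashes and double quotes for embedding in a JSON string.
# Assumes single-line input; newlines and other control characters are not handled.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the request body for POST /api/generate with streaming disabled.
build_generate_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send one prompt; the JSON reply carries the generated text in "response".
ollama_generate() {
  local url="${OLLAMA_URL:-http://127.0.0.1:11434}"
  curl -fsS "$url/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(build_generate_payload "$1" "$2")"
}
```

Usage: `ollama_generate deepseek-r1:671b 'Why is the sky blue?'`.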

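Configure Docker + nvidia-docker2

Install Docker

Use the official install script, pulling packages from the TUNA (Tsinghua) mirror:

    export DOWNLOAD_URL="https://mirrors.tuna.tsinghua.edu.cn/docker-ce"
    curl -fsSL https://raw.githubusercontent.com/docker/docker-install/master/install.sh | sh

Install the GPU Docker components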

Install nvidia-docker2

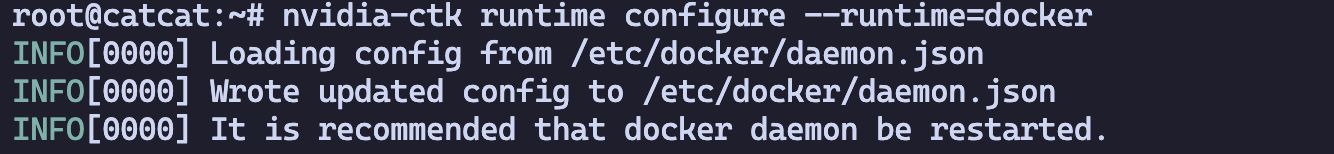

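    apt-get install -y nvidia-docker2
    nvidia-ctk runtime configure --runtime=docker

The second command modifies /etc/docker/daemon.json to register the nvidia container runtime. Restart Docker (systemctl restart docker) for the change to take effect.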

Configure Docker parameters

    root@catcat:~# cat /etc/docker/daemon.json
    {
      "data-root": "/root/docker_data",
      "experimental": true,
      "log-driver": "json-file",
      "log-opts": {
        "max-file": "3",
        "max-size": "20m"
      },
      "registry-mirrors": [
        "https://docker.1ms.run"
      ],
      "runtimes": {
        "nvidia": {
          "args": [],
          "path": "nvidia-container-runtime"
        }
      }
    }

Test
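Two quick checks can accompany the container test. A sketch with hypothetical helper names: `check_daemon_json` validates the file with Python's `json.tool` module (assumes `python3` is installed) and then looks for the runtime path that `nvidia-ctk` registers; `count_gpus` counts the `GPU <n>:` lines that `nvidia-smi -L` prints, one per device. Once Docker has been restarted, `docker info` also lists the registered runtimes.

```shell
#!/usr/bin/env bash
# Validate Docker's daemon.json and check that the nvidia runtime is registered.
# check_daemon_json is hypothetical; JSON parsing is delegated to python3.
check_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  if ! python3 -m json.tool "$file" >/dev/null 2>&1; then
    echo "invalid or unreadable JSON: $file" >&2
    return 1
  fi
  # Text match only: confirms the runtime path appears somewhere in the file.
  if ! grep -q '"nvidia-container-runtime"' "$file"; then
    echo "nvidia runtime not found in $file" >&2
    return 1
  fi
  echo "ok: $file"
}

# Count devices in `nvidia-smi -L` output read from stdin.
# count_gpus is hypothetical; "|| true" keeps the exit status 0 when none match.
count_gpus() {
  grep -c '^GPU [0-9]' || true
}
```

For example, `check_daemon_json` on the host, then `docker run --rm --gpus all ubuntu:22.04 nvidia-smi -L | count_gpus`, which should print 8 on this machine.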

docker run --rm -it --gpus all ubuntu:22.04 /bin/bash

    root@catcat:~# docker run --rm -it --gpus all ubuntu:22.04 /bin/bash
    Unable to find image 'ubuntu:22.04' locally
    22.04: Pulling from library/ubuntu
    6414378b6477: Pull complete
    Digest: sha256:0e5e4a57c2499249aafc3b40fcd541e9a456aab7296681a3994d631587203f97
    Status: Downloaded newer image for ubuntu:22.04
    root@e36b1bb454b6:/# nvidia-smi
    Wed Jan 22 02:03:29 2025
    +-----------------------------------------------------------------------------------------+
    | NVIDIA-SMI 550.127.05             Driver Version: 550.127.05     CUDA Version: 12.4     |
    |-----------------------------------------+------------------------+----------------------+
    | GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
    |                                         |                        |               MIG M. |
    |=========================================+========================+======================|
    |   0  NVIDIA A800-SXM4-80GB          Off |   00000000:23:00.0 Off |                    0 |
    | N/A   29C    P0             56W /  400W |       4MiB /  81920MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   1  NVIDIA A800-SXM4-80GB          Off |   00000000:24:00.0 Off |                    0 |
    | N/A   29C    P0             56W /  400W |       4MiB /  81920MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   2  NVIDIA A800-SXM4-80GB          Off |   00000000:43:00.0 Off |                    0 |
    | N/A   28C    P0             57W /  400W |       4MiB /  81920MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   3  NVIDIA A800-SXM4-80GB          Off |   00000000:44:00.0 Off |                    0 |
    | N/A   28C    P0             58W /  400W |       4MiB /  81920MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   4  NVIDIA A800-SXM4-80GB          Off |   00000000:83:00.0 Off |                    0 |
    | N/A   28C    P0             57W /  400W |       4MiB /  81920MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   5  NVIDIA A800-SXM4-80GB          Off |   00000000:84:00.0 Off |                    0 |
    | N/A   29C    P0             60W /  400W |       4MiB /  81920MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   6  NVIDIA A800-SXM4-80GB          Off |   00000000:C3:00.0 Off |                    0 |
    | N/A   29C    P0             59W /  400W |       4MiB /  81920MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+
    |   7  NVIDIA A800-SXM4-80GB          Off |   00000000:C4:00.0 Off |                    0 |
    | N/A   29C    P0             60W /  400W |       4MiB /  81920MiB |      0%      Default |
    |                                         |                        |             Disabled |
    +-----------------------------------------+------------------------+----------------------+

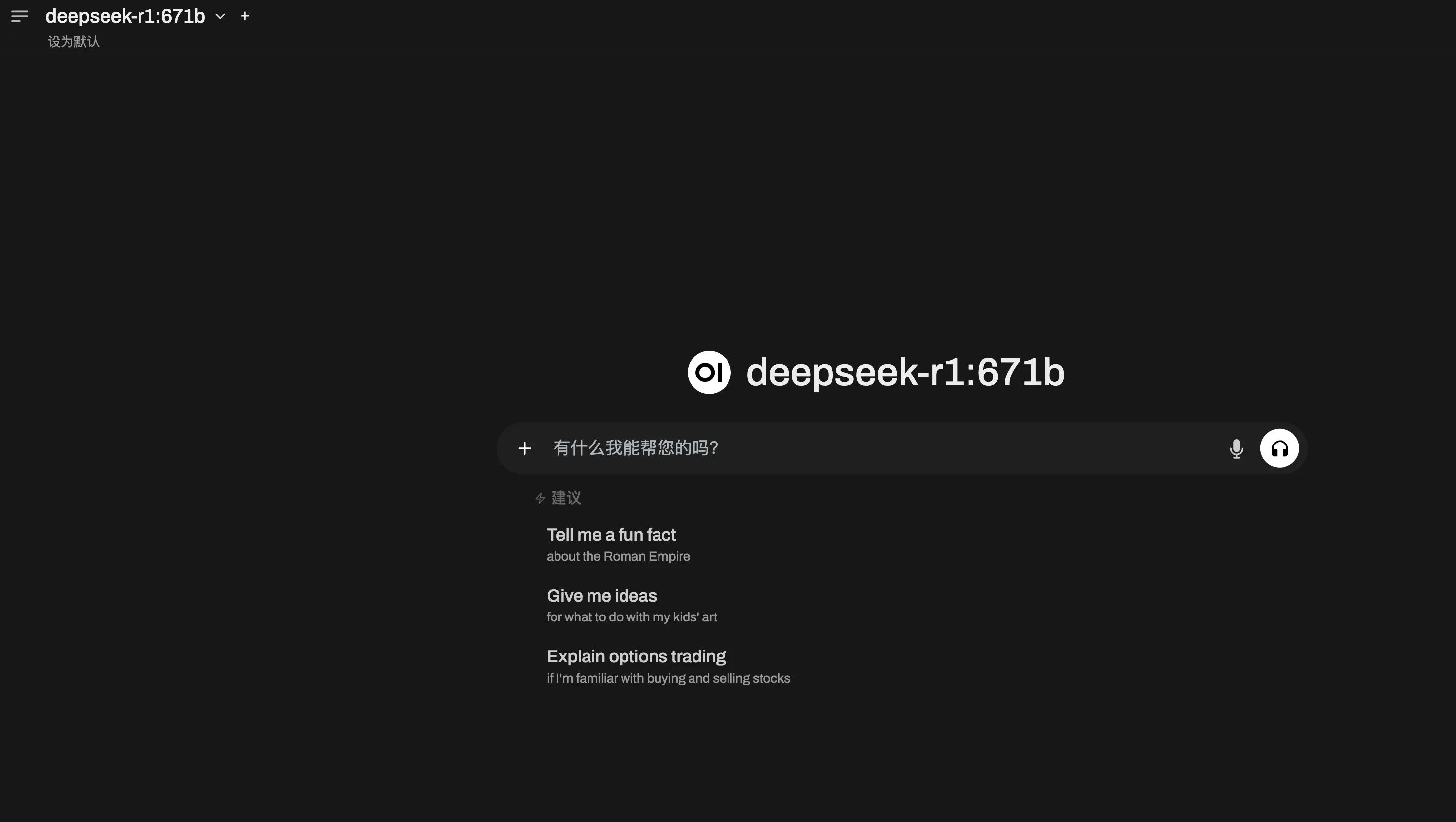

    +-----------------------------------------------------------------------------------------+
    | Processes:                                                                              |
    |  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
    |        ID   ID                                                               Usage      |
    |=========================================================================================|
    |  No running processes found                                                             |
    +-----------------------------------------------------------------------------------------+

Deploy Open WebUI

    version: '3.8'

    services:
      open-webui:
        image: ghcr.sakiko.de/open-webui/open-webui:main
        container_name: open-webui
        restart: always
        ports:
          - "3000:8080"
        volumes:
          - open-webui:/app/backend/data
        extra_hosts:
          - "host.docker.internal:host-gateway"

    volumes:
      open-webui:
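The `extra_hosts` entry maps `host.docker.internal` to the host's gateway address, which is why Ollama was set to listen on 0.0.0.0 earlier: a server bound only to 127.0.0.1 on the host is not reachable from inside the container. If the UI does not find Ollama on its own, Open WebUI's `OLLAMA_BASE_URL` environment variable can point it at `http://host.docker.internal:11434` explicitly. Below is a sketch for bringing the stack up and waiting until the UI answers on the mapped port 3000; `start_open_webui` is a hypothetical helper and assumes the Docker Compose plugin (`docker compose`) and `curl` are installed and that the file above is saved as `docker-compose.yml` in the given directory.

```shell
#!/usr/bin/env bash
# Start the compose stack in a directory, then poll the UI on the host port.
# start_open_webui is hypothetical; port 3000 matches "3000:8080" above.
start_open_webui() {
  local dir="${1:-.}"
  local port="${2:-3000}"
  local timeout="${3:-120}"
  local i=0
  (cd "$dir" && docker compose up -d) || return 1
  while [ "$i" -lt "$timeout" ]; do
    if curl -fsS --max-time 2 "http://127.0.0.1:$port/" >/dev/null 2>&1; then
      echo "Open WebUI is up on port $port"
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "Open WebUI did not respond on port $port within ${timeout}s" >&2
  return 1
}
```

For example, `start_open_webui /root/open-webui` (a hypothetical directory), then open http://<server-ip>:3000 in a browser.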


https://catcat.blog/ubuntu-22-048a800-ollama-deepseek-r1